Neuromorphic technology

Neuromorphic machine learning technology is based on spiking neural networks (SNNs) and biologically plausible learning algorithms that rely on spike-based inter-neuron communication and synaptic plasticity. These mechanisms give neuromorphic solutions advantages in energy efficiency and performance, which are most pronounced when SNNs are combined with neuromorphic processors and neuromorphic sensors.

Spiking neural networks

SNNs are the third generation of artificial neural networks and replicate the operating principles of biological neurons and synapses more accurately than earlier generations. They are central to advancing neuromorphic computing and bring artificial intelligence systems closer to their biological counterparts.

The key differences between SNNs and traditional artificial neural networks are as follows

The asynchronous, event-driven operating principle of SNNs makes them extremely energy-efficient and fast, which opens up broad opportunities for use in real-world, energy-autonomous AI systems that operate in real time.
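To make the spike-based principle concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified spiking neuron model. It is an illustration only: the function name `simulate_lif` and all parameter values are assumptions chosen for this example, and the text does not state which neuron models are actually used. The neuron stays silent until its membrane potential crosses a threshold, then emits a discrete spike event and resets.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    Hypothetical helper for illustration; parameters are arbitrary.
    Returns the list of time-step indices at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # Forward-Euler update: leak toward v_rest, integrate the input.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_thresh:
            # Threshold crossed: emit a spike event and reset the potential.
            spikes.append(step)
            v = v_reset
    return spikes


weak = simulate_lif([0.5] * 100)    # sub-threshold input
strong = simulate_lif([2.0] * 100)  # supra-threshold input
print(len(weak), len(strong))
```

With sub-threshold input the neuron produces no output events at all, so downstream neurons have nothing to process. This sparsity is what event-driven hardware can exploit to save energy.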

Kaspersky Neuromorphic Platform

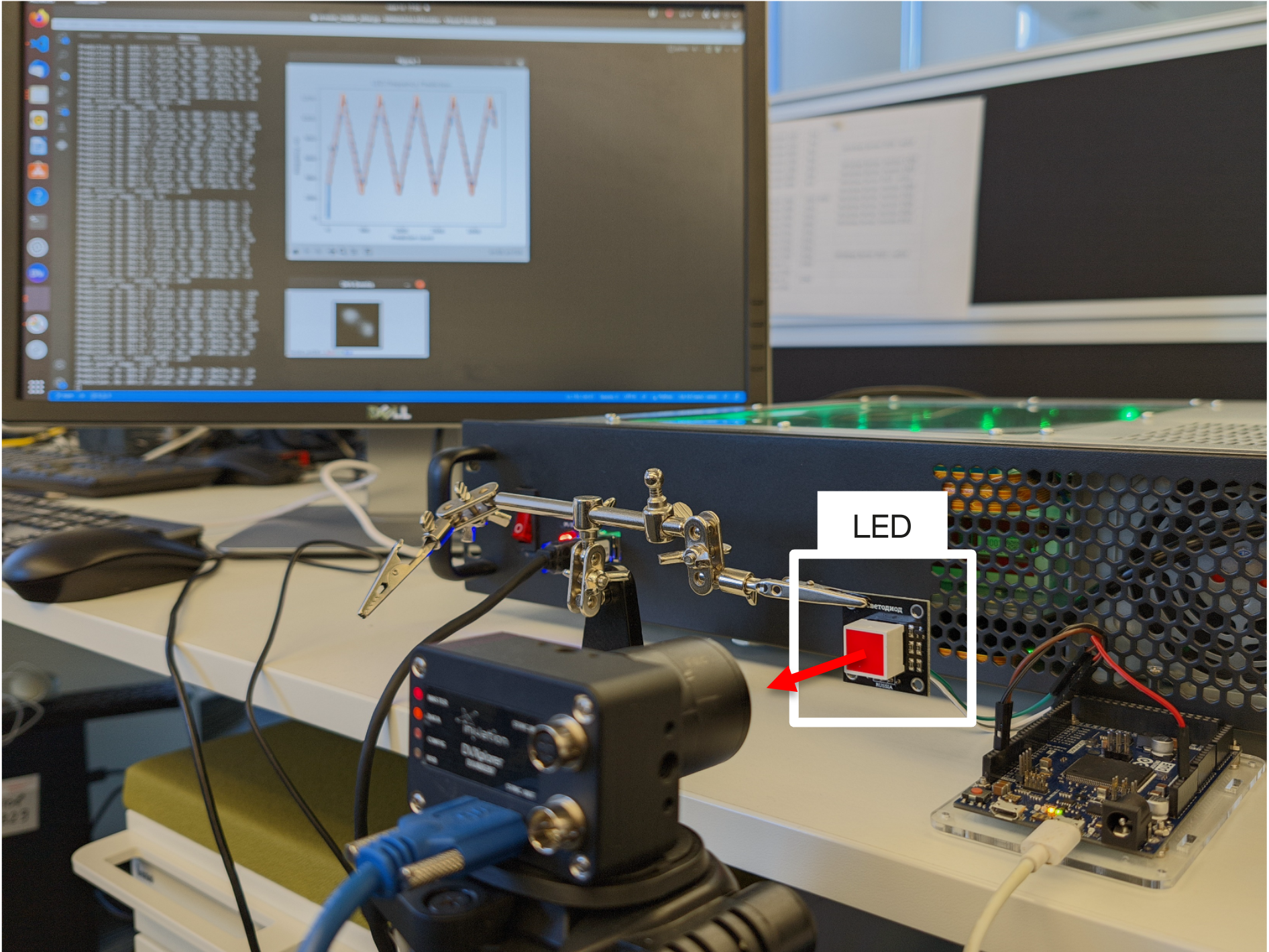

Our research team has developed Kaspersky Neuromorphic Platform (KNP), an open-source platform for neuromorphic machine learning.

We use the KNP toolkit to research effective methods for training SNNs based on spike-timing-dependent plasticity (STDP), to explore new cognitive architectures, and to build applied solutions based on them.
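For readers unfamiliar with STDP (spike-timing-dependent plasticity), the sketch below shows the classic pair-based form of the rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened in the opposite case, with an effect that decays exponentially as the spikes move apart in time. This is a generic textbook formulation, not the KNP API or the specific training methods mentioned above. The function name `stdp_delta_w` and the constants are assumptions made for illustration.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    Hypothetical helper; amplitudes and time constants are arbitrary.
    Spike times are in the same unit as the time constants (e.g. ms).
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation (weight increases).
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression (weight decreases).
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0  # Simultaneous spikes: no change in this sketch.


print(stdp_delta_w(t_pre=10.0, t_post=15.0))  # positive change
print(stdp_delta_w(t_pre=15.0, t_post=10.0))  # negative change
```

The rule is local: it needs only the spike times of the two neurons a synapse connects, which is why it is often described as biologically plausible.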